Data Engineering Fundamentals

Key Data Engineering Topics to Help Kickstart Your Career

Posted by Alejandro Rusi

on September 29, 2021 · 10 mins read

There is no clear path for starting a career in the exciting world of Data. Enthusiastic people who come from completely different backgrounds fall in love with the idea of working with it, and each starts their journey in their own unique way. Some start with online courses, some follow an academic path and others start hacking away at their own personal projects or take part in Kaggle competitions.

Getting to work with people from such different backgrounds is awesome! It means that the world of Data is full of unique and fresh perspectives. But it also means that everyone has their own level of knowledge on different data-related tools and topics.

The topics we'll mention in this entry are not usually taught in online courses or in academic environments, and aren't as flashy as training a Machine Learning model or deploying the latest Big Data architecture. But they'll be a part of your day-to-day life if you work with Data.

We think these topics are so important that we make sure everyone who joins Mutt knows them, whether they are Data Scientists, Data Engineers or Machine Learning specialists.

With this entry we'll help YOU start your journey to become a Data Developer. We'll focus on why we think these topics are interesting rather than explain them completely. We hope our introduction will serve as motivation for taking the first step in mastering them!

Version Control and Workflow

Have you ever wanted to go back to a previous version of your code?

Or maybe you lost all of your progress because your Hard Drive stopped working!

Version Control systems such as git take care of just that: they record changes to files over time, and can recall any old version anytime.

git is already a Distributed Version Control system: every clone carries the full history of changes. Hosting the repository on Github or Gitlab adds a shared remote copy, meaning that this storyline of changes doesn't depend on your computer anymore and can be pulled from any device that has access to the repository.

Most if not all professional projects will have multiple collaborators writing, reading and reviewing code.

Without Version Control it just wouldn't be possible to have them all working together in the same project.

Well, maybe it would be possible but it would cause lots of headaches along the way.

That's why knowing a version control tool like git is key: it'll allow you to collaborate with anyone, anywhere.

Key Commands and Functionalities

We know that starting to learn git from scratch can be kind of overwhelming.

Lots of new concepts, commands and things that can go wrong!

It's OK to keep it simple at first. Here is an easy yet productive set of steps you should understand how to do:

- Obtain or create your repository: if the repository already exists you'll have to use `git clone` to clone it into your computer. If not, you'll have to create it from scratch. Personally, I find it easier to first create an empty repository in Gitlab or Github and then clone it into my machine. Now you can start locally changing the files inside the repository!

- Change your files: simply modify the files you'll be working on.

- Understand the changes you'll be introducing: it's important to know for sure that we're modifying the right thing. `git status` will list the files created and modified, and `git diff` will show you each change you've introduced line-by-line. I can't begin to tell you how many times it helped me spot `print` statements I've introduced that would dirty up the code.

- Add your changes: now you have to choose what changes you'll add. `git add` all the files you'd like to push to your repository. Make sure to `git add` only what's necessary! For example, there's no need to add log files or your IDE config files (adding a `.gitignore` would be a great idea to avoid this problem altogether). It's a good idea to re-run `git status` to make sure that you're adding the correct files, and `git diff --staged` to check their contents.

- Commit and push: we're at the finish line. All that's left is to `git commit` to group our changes into a commit (don't forget to give it a meaningful message!) and `git push` to send our changes to the repository.
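To make these steps concrete, here is a minimal sketch you can run in a terminal. It is only an illustration under a few assumptions: a throwaway bare repository in a temporary directory stands in for the empty repository you'd create in Gitlab or Github, and the file names, identity and commit message are hypothetical.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Assumption: a local bare repository plays the role of the remote
# you would normally create in Gitlab or Github.
sandbox="$(mktemp -d)"
git init --quiet --bare "$sandbox/remote.git"

# 1. Obtain the repository.
git clone --quiet "$sandbox/remote.git" "$sandbox/project"
cd "$sandbox/project"
git config user.name "Example Dev"        # hypothetical identity
git config user.email "dev@example.com"

# 2. Change your files (all names here are hypothetical).
printf 'print("hello data")\n' > pipeline.py
printf 'debug output\n' > run.log
printf '*.log\n' > .gitignore             # keep log files out of the repository

# 3. Understand the changes you are introducing.
git status --short                        # lists new and modified files
git --no-pager diff                       # only covers files git already tracks

# 4. Add only what's necessary, then double-check it.
git add pipeline.py .gitignore
git status --short
git --no-pager diff --staged              # line-by-line view of what will be committed

# 5. Commit with a meaningful message and push the current branch.
git commit --quiet -m "Add first pipeline script"
git push --quiet origin HEAD
```

Note that `run.log` never reaches the repository: the `.gitignore` entry hides it from `git status`, so it can't be added by accident.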

But wait, we just pushed directly to our main branch, right?

The Git Workflow

Using the commands we mentioned earlier to push directly to the main branch of your repository might work if you're a team of 1, but it'll start to cause headaches once your team starts to grow.

You'll have to be constantly using git pull to obtain the latest changes, each time resolving merge conflicts when more than one person modified the same file.

In which branch should we add our changes? Who is working on what? Should we always do a Code Review? Is the code we are adding "good enough"? What is running in production environments and what is not ready to be launched to the public yet?

This is what Git Workflows take care of: they define a sensible set of steps you and your teammates should take when collaborating using git.

At Mutt Data we use the Github Flow.

It allows us to always have our changes go through code review before merging, and to never push directly to the main branch (thankfully you can define protected branches in both Github and Gitlab).

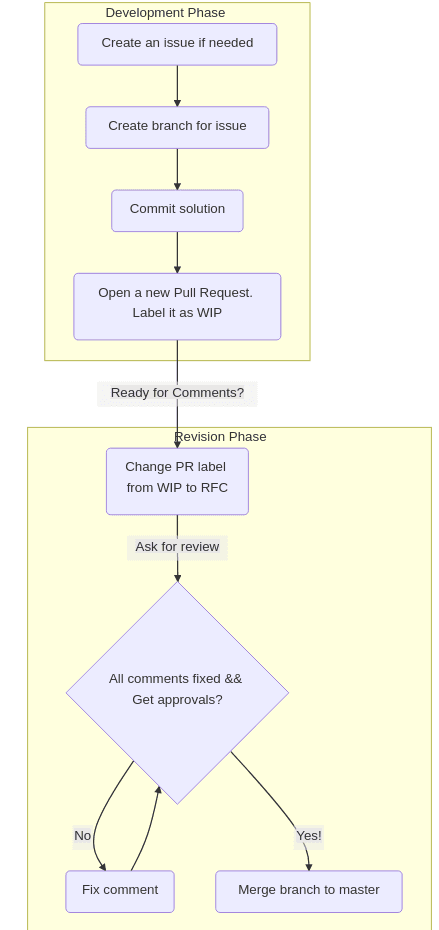

The steps we take when we start working on a new piece of code or feature are:

- Assign a Gitlab issue, or create it if it doesn't exist yet. We use issues to document what should be done and share useful context.

- Someone should create and push a new branch, with a declarative name.

- That person should immediately create a new Merge Request (called a Pull Request in Github) to make what we are working on visible. We add the WIP prefix to its title to tell people it's still a Work In Progress.

- Once we think that our code is ready to be reviewed, we change the WIP prefix to RFC (Request For Comments) and tag the code reviewers.

- Don't feel down if the reviewers ask for changes! It's normal for errors and bugs to surface when more than one set of eyes reads a piece of code. This is also a good learning opportunity, embrace it!

- Once everyone agrees that the Merge Request is done, it gets Approved and then merged.

It's important to be open, verbose and make your progress visible! And this is kind of an unwritten rule of every Git Workflow, but try to make your Pull Requests/Merge Requests as small as possible! More about that in this article by Michael Lynch.
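The command-line half of this workflow can be sketched as follows. This is a hedged illustration, not our exact setup: a local bare repository stands in for the Gitlab project, and the branch name, file and commit messages are hypothetical. Creating the issue, opening the Merge Request and changing its WIP/RFC prefix happen in the Gitlab or Github web interface, so they only appear here as a comment.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Assumption: a local bare repository stands in for the shared remote.
sandbox="$(mktemp -d)"
git init --quiet --bare "$sandbox/remote.git"
git clone --quiet "$sandbox/remote.git" "$sandbox/project"
cd "$sandbox/project"
git config user.name "Example Dev"        # hypothetical identity
git config user.email "dev@example.com"

# Seed the main branch so there is something to branch from.
git commit --quiet --allow-empty -m "Initial commit"
git push --quiet origin HEAD
main="$(git symbolic-ref --short HEAD)"   # whatever your default branch is called

# Create a new branch with a declarative name (hypothetical).
branch="add-sales-report"
git checkout --quiet -b "$branch"

# Work on the branch, never directly on the main branch.
printf 'SELECT 1;\n' > report.sql
git add report.sql
git commit --quiet -m "Add sales report query"

# Push the branch so teammates can see it.
git push --quiet --set-upstream origin "$branch"

# Next step (web interface): open a Merge Request from "$branch" into
# "$main" with the WIP prefix, and switch it to RFC once it is ready for review.
```

The main branch on the remote still only holds the initial commit: the new work lives on its own branch until the Merge Request is approved and merged.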

Reproducible Development Environments

If you've ever downloaded a piece of Python code and run `pip install` until it finally worked, or uttered the infamous "but it works on my machine!" to your workmates, there's no need to worry: there are tools to make sure that this never happens again.

These tools will help set up your project not only for you, but for others as well. Their goal is the same: to easily create Reproducible Development Environments that can be started up with a few console commands (if these tools are good then one command should suffice) and that create the same environment on any computer.

This means that it doesn't matter if you run it on your personal computer with Python 3.7 or on your friend's computer with Python 3.9: the environment created will be the same.

Knowing how to build and work with Reproducible Development Environments will be key in helping you work with others and deploy your code to production environments.

Packaging and Dependency Management

Wouldn't it be neat if we could instantly install and run a project, instead of randomly installing its dependencies hoping for the best? And even if the best did happen and we managed to install the project, it would still live in a fickle environment (our own computer) where things are constantly changing. Next time we install or uninstall something, who knows if the project will still work!

Most modern programming languages have Packaging and Dependency Management tools to help with this problem: Rust has cargo and JavaScript has npm.

At Mutt Data we use poetry for packaging and handling the dependencies of our Python projects.

There is no need to go over why we love poetry again when Pedro explained it so clearly in his entry.

Docker and docker-compose

Thanks to poetry we now have a neat way to package our project and you and your teammates can start development.

But if your aim is to release this package to a production environment like a cloud platform then you'd have to do the same setup there.

What if the operating system offered by your cloud provider is substantially different from the one you use?

Can you still ensure that everything will work as intended, just like on your machine?

Docker can help you with that. Docker allows you to package your software in containers. A container image bundles your code, its dependencies and even the operating system's libraries and tools (containers share the host's kernel, so they are lighter than a full virtual machine). Sometimes it isn't even necessary to configure what your container needs yourself: Docker Hub has tons of ready-to-run images with all the necessary things already installed and configured. And here is the best part: your app runs in the same environment inside a Docker container whether that container is on your computer or on a remote server!

If you've never used Docker before, we recommend installing it and then running `docker run -dp 80:80 docker/getting-started`.

Now visit http://localhost and follow its interactive tutorial!

We use Poetry and Docker together at Mutt Data.

We tend to use Poetry for its handy dependency management and the ease of creating virtual environments in development.

It's also super handy to install your project as a package and try it in different places like a jupyter-notebook!

But when it's time to deploy to a production environment we rely on Docker containers to make sure what runs on our machine will also run in production.

But that's not all!

What if your application requires multiple services, like a database or a web server?

Instead of manually spinning up multiple Docker containers yourself, use docker-compose!

For example, check out our example docker-compose in our MLFlow entry.
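To give a rough idea of what that looks like, the sketch below writes a small `docker-compose.yml` describing two services. Everything in it is an assumption made for illustration: the service names, the `nginx:stable` and `postgres:16` images from Docker Hub, the port mapping and the placeholder password. It also assumes a recent docker-compose release that no longer requires a top-level `version` key.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical project folder for the example.
project="$(mktemp -d)"

# Describe a two-service application: a web server and a database.
cat > "$project/docker-compose.yml" <<'EOF'
services:
  web:
    image: nginx:stable
    ports:
      - "8080:80"                  # host port 8080 -> container port 80
    depends_on:
      - db                         # start the database before the web server
  db:
    image: postgres:16
    environment:
      POSTGRES_PASSWORD: example   # placeholder, never commit real secrets
EOF

# With Docker installed, one command starts both containers:
#   docker-compose -f "$project/docker-compose.yml" up -d
# and one command stops and removes them:
#   docker-compose -f "$project/docker-compose.yml" down
```

The point is that the whole setup lives in a single file you can commit to your repository, so every teammate spins up the same set of services.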

Know your way around the console

A developer will spend most of their time in the Console.

We know, it's scary at first.

It's just a pitch black screen, its commands, and you.

An IDE or modern code editor might feel more comfortable, helping you along the way when you are developing.

And don't get us wrong, we think they are awesome!

But you'll have to face your demons sooner or later: some functionalities and commands are only available via the console and don't have a graphical counterpart.

And what happens when you connect to a remote instance using the ssh command?

There is no graphical interface available, just you, your keyboard and the dark screen.

So, what are some of the fundamental things you absolutely need to know?

- Move around directories with the `cd` command, know where you are standing with the `pwd` command.

- Creating empty files with `touch`, moving files with `mv`, copying files with `cp`, removing them with `rm` and listing them with `ls`.

- And the last thing is: learn to look around for answers! The `man` command will bring up complete and lengthy documentation for any command you don't understand (for example `man ls` will bring up the documentation on `ls` and the different ways you can run it with flags).
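Here is a short session that strings these commands together. It is just a sketch: it works inside a temporary directory so nothing on your machine is touched, the file and directory names are hypothetical, and `mkdir` (not covered above) is used to create a folder to move files into.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Work in a throwaway directory.
workdir="$(mktemp -d)"
cd "$workdir"                   # move into it
pwd                             # print where we are standing

touch notes.txt                 # create an empty file
mkdir archive                   # create a directory
cp notes.txt notes_backup.txt   # copy the file
mv notes.txt archive/           # move the original into archive/
rm notes_backup.txt             # remove the copy

ls                              # only the archive directory is left here
ls archive                      # notes.txt now lives inside archive/

# When in doubt, read the manual (opens an interactive pager):
#   man ls
```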

Of course this is just the first step towards mastering the command-line. At Mutt Data we always recommend checking out The Missing Semester of Your CS Education, an excellent course which will teach you all of the ways you can become more productive using these tools.

Be comfortable with your code editor or IDE

Of course you'll also spend lots of time writing and reading code. At Mutt Data each of us has their own favourite tool for this: VSCode, PyCharm, Vim or Emacs. We don't judge anyone for the tool they like to use to write code! But here is the catch: it's important that you are comfortable with the tool you've chosen.

What do we mean by comfortable? Knowing how to write, edit and read code with it isn't particularly hard. But you should take at least some time to learn how to use its hotkeys, plugins and possible configurations. We know it might seem boring at first (unless you are a code editor fanatic!), but it will save you LOTS of time down the line.

And remember, no tool is strictly better than the others. It all depends on which one fits your needs better and how much productivity you can squeeze out of it. Don't use VSCode just because it's the most popular or Vim because it's the most "hardcore"; use a tool because you like it and it makes you productive!

The path forward

We hope that after reading this you are not completely scared or bored out of your mind! But remember: these are the tools and techniques you'll need to make the really interesting stuff happen! Sharing your work, collaborating with your teammates and being comfortable with your development environment will help you deliver the best and most exciting Data products. They'll be a part of your day-to-day life, whether you're training the most cutting-edge Machine Learning model or building the most complex and massive Big Data architecture.

As a treat for reading the whole article... good news, we are hiring! It doesn't matter if you're just starting your path in the exciting world of Data or if you're a seasoned expert in these topics: we want to hear from you!

During your first weeks at Mutt Data, you'll go through the Mutt Academy. There you'll learn and put into practice all of these topics and more! If you already know all about these fundamentals that's cool too: no need to go over them again. We always "custom fit" the content you learn at the Mutt Academy with your previous experience and interests in mind. Never used Airflow? Want to try out the newest DataOps tools? You'll have time to learn all about them in our Onboarding when you join Mutt Data!

You can read more about reasons to join our team in our article: 5 Reasons to join the Mutt Data Team and [APPLY HERE]({{ site.jobs_page }})!