Generating Usable Images with AI

5 Tips To Generate Usable GenAI Images

Posted by Pablo Lorenzatto

on January 3, 2024 · 8 mins read

Introduction

As we mentioned in our previous post, GenAI is unreliable. On top of relying on business rules to tame this issue, we can also attempt to improve the generation process in some sense.

In this post we introduce a couple of techniques and tricks to improve/detect problems, specifically for the case of image generation.

From a business perspective, making generative AI more reliable, as already mentioned, allows us to take different routes: opt-out instead of opt-in, less human involvement, etc. Moreover, it unlocks some applications that would not be possible under more unreliable models, such as automation.

To begin with our image generation process review, let’s start with the simplest setup: a machine runs a model and generates images.

Given this simple setup, our options are quite limited. We can either enhance the reliability of the model or we can select and tune the already generated images. In the following sections we present some approaches that address both of these aspects.

Caveat: The future is coming fast

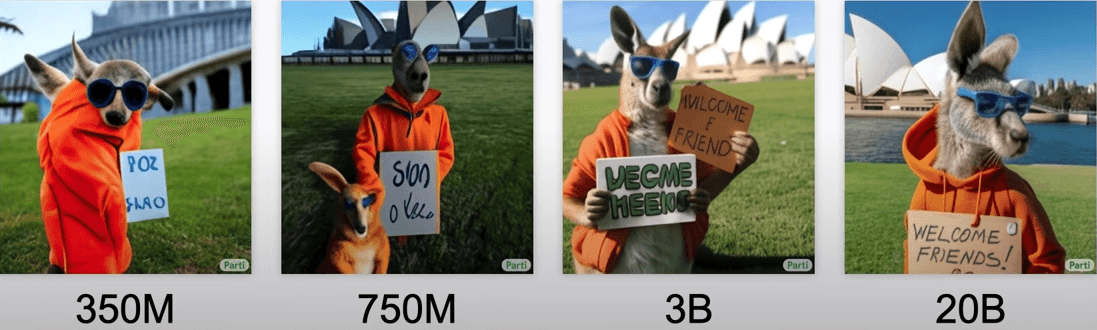

Before presenting these tricks, we need to address the elephant in the room. The future is coming… and it’s coming fast! There is a prevailing trend that bigger and better models are emerging left and right. Evidence indicates that larger models can overcome the problems and limitations previously found in smaller models.

Comparison of results from models with different numbers of parameters.

Therefore, with the landscape constantly changing, keep this in mind: besides the tips shared later, the key is to think creatively, stay updated and bring value to the next significant model release.

Tip 1: Use fine tuned models instead of vanilla ones

If you want to create images for a specific purpose, it's usually better to use a model that has been fine-tuned for a similar task rather than the original model.

There are various pre-trained models available online for different purposes like portraits or realism. It's a good idea to start with one of these if your task is closely related to those areas, it will likely provide higher-quality results.

If you have the resources, you can also fine-tune one yourself. What’s better than a model tailored to your needs? There are plenty of resources on how to do this, for example, this training document from hugging face or this beginners guide from OctoML.

Tip 2: Use LoRAs for Fine-Tuning When Datasets are Small

We have established that fine-tuning is an effective alternative when it comes to generating images for a specific problem. But what if your dataset is really small? Don't fret, you can use Low-Rank Adaptation.

It's cheaper than fully-fledged fine-tuning, takes less time, and can be done with very small datasets (≈ 10-200 range).

It's also great for 'teaching' specific concepts to models, guiding the generation into narrower, more predictable scope. If you're not sure where to start, Hugging Face has a great guide with scripts to train your LoRA so that you can plug-and-play.

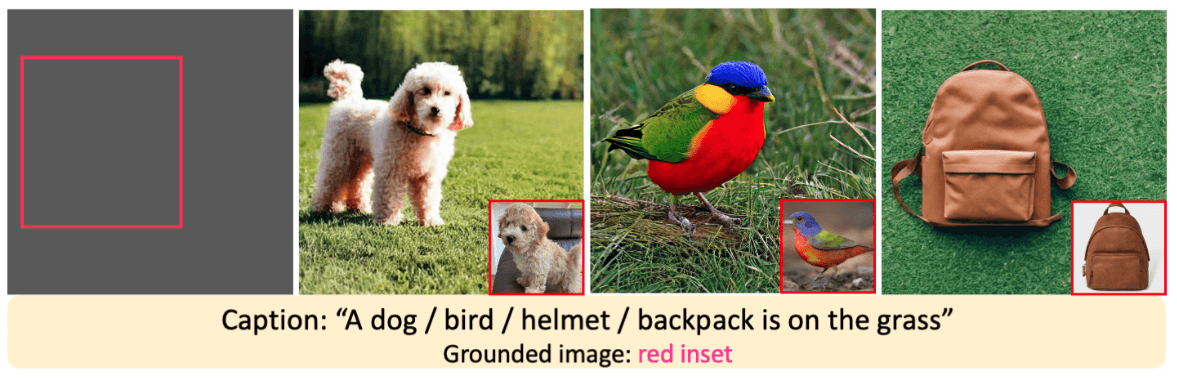

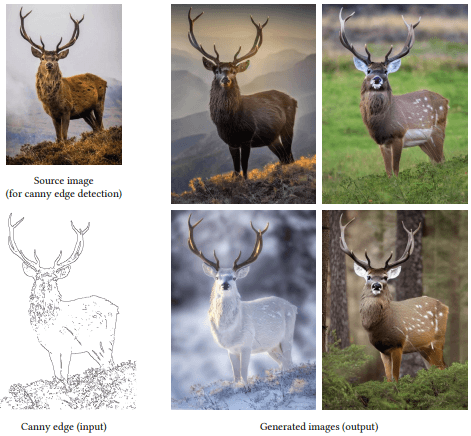

Tip 3: Use Text-To-Image Models Trained For Specific Tasks To Control Your Outputs

What do we mean by controlling your outputs? Well let’s bring it down to a business example, say you need to design an ad where a logo or item needs to be in a specific spot or you need a character to be in a specific pose. Essentially, an image that adheres to a specific structure or composition.

There are great text-to-image models that give you control over these variables and they’re trained for different specific tasks (you can even train your own, but… you need super large datasets).

Some models to look into: ControlNet, T2I Adapter, and Gligen.

Note that, while these tricks give you control over the output, they might reduce the quality of the results, in other words, images may look bad. (again depending on the underlying text to image model among other factors).

Tip 4: Consider What We Focus On In An Image

While not strictly used to improve generated images, saliency maps provide a powerful tool for assessing the attention placed on different elements within an image. This proves to be beneficial in various ways—by grasping where people focus, you can assign varying importance to errors in samples, identify optimal regions for incorporating elements, or decide the most suitable cropping direction.

Tip 5: Score and Filter

Last but not least, one of the most useful methods we found was to score generated images and classify error types. In this way, we could tackle each error type separately in a divide-and-conquer fashion, increasing the percentage of acceptable images, identifying patterns, and subsequently filtering out those we found unsuitable.

The easiest and most effective way we found to implement this approach was by making up simple heuristics. We examined several failure cases and determined whether there was a possibility to eliminate the most critical issues.

Below, we provide a few examples of heuristics from different use cases.

Heuristic 1: When generating images featuring people, small faces tend to look bad

We manually assessed about 600 faces and discovered a clear pattern: faces tend to have more mistakes when they are in a small area. Thus, filtering images containing small faces results to be a compelling strategy.

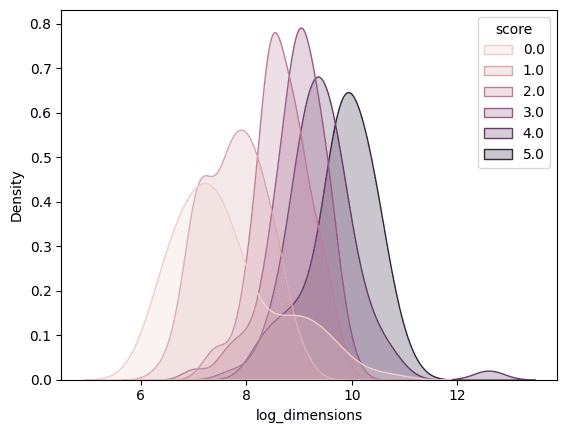

Density plot showing face generation quality (1 to 5) versus size (in log of pixel size), with higher scores for larger dimensions.

Heuristic 2: When generating images featuring people, face detector models are a good proxy

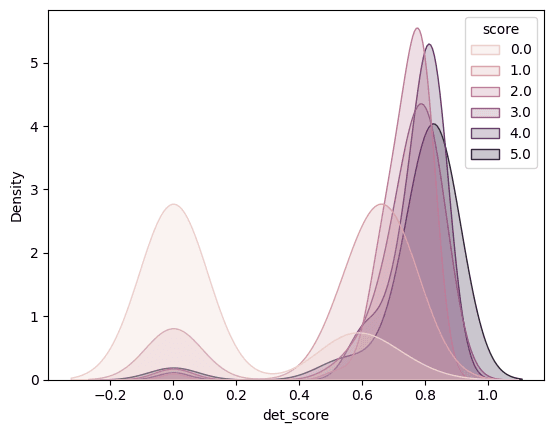

We also found that an off-the-shelf face detector’s score can serve as a straightforward metric for filtering bad images. Automatic face detectors seem to be less confident that the output is a face when the generated face has issues. So, naturally, we can leverage an out-of-the-box face detector model to maintain scores above a suitable threshold (depending on your specific use case). Below, there is a plot comparing the detector confidence distribution for different manually tagged images according to a human scorer.

So the worst offenders can easily be discarded with this technique. For images with higher scores the distributions are too close so this might not work for “almost right” samples.

Heuristic 3: When performing inpainting to generate people, simply check skin pixels

Where is the head? In a specific use case, we found ourselves needing to perform inpainting to complete a shirt picture with a human being. However, we soon discovered that some samples had a clear issue…

Sometimes generated samples had no head or arms! We implemented the most simple heuristic we could have come up with. Just check whether there are enough skin colored pixels in the region. If there are more than some desired threshold then assume its a head/arm. This very simple trick managed to eliminate a good chunk of bad samples.

Heuristic 4: Probing images for strange semantics with multi-modal models

A generated image can be wrong for a number of reasons, one common issue is the portrayal of impossibilities: facial abnormalities or objects seamlessly merging into one another. But images can also be wrong because they're not aligned with our human principles and comprehension of the world. To illustrate, consider the image below:

Everything going on in this picture is physically possible, but we’re immediately struck by the fact the man is pointing the drill at his face, something you’re not supposed to do. But how does that AI know that?

Multimodal Models offer a promising approach to address this issue: connecting images and text. For example, Blip Models, while heavy on hardware, might solve this issue. We gave it a go, but failed to achieve useful results, even when leading the question.

| Model | Prompt | Result |

|---|---|---|

| blip2-opt-6.7b | Question: Why is this picture peculiar? Answer: | The man is holding a drill. |

| Question: Where is the man pointing the drill? Answer: | The man is pointing the drill at the wall. | |

| Question: Is the man doing something funny? Answer: | No, he is working on a drill. | |

| Question: Is the man pointing the drill to his face? Answer: | No, the man is pointing the drill to the wall. | |

| instructblip-flan-t5-xl | Question: Why is this picture peculiar? Answer: | The picture is peculiar because the man is wearing a hard hat. |

| The picture is strange because the man is doing something wrong. Why? | The picture is strange because the man is holding the drill incorrectly. | |

| Describe the image in full detail | The image features a man in a hard hat, holding a drill. He is wearing a safety helmet and is smiling, which suggests happiness. The drill is likely a tool used in the construction industry. | |

| blip2_vicuna7b_instruct | What is unusual about this image? | The image shows a man in a hard hat holding an electric drill. This suggests he is working in a potentially hazardous environment, like a factory or construction site. The hard hat is personal protective equipment (PPE) for head injury prevention, and the drill is for construction tasks. |

| What is funny about this image? | The humor lies in the man wearing a hard hat while holding an electric drill and smiling, creating a lighthearted scene. | |

| What is wrong with the man holding the drill? | There is no clear indication of anything wrong with the man or his situation. He appears to be using the drill as part of his job or task. |

Heuristic 5: Automatic Scoring

Even though two images might be valid, one might still be better than another. Why? Well, results will ultimately depend on the image's goal. For example, some ads work better for producing conversions than others. This phenomenon is hard to quantify because preference is hard to quantify. Asking experts would be impractical at scale, instead an automatic metric could get the job done.

We found Pickscore (refs: 1 2) to be a useful automated metric for this purpose. Pickscore is an AI model that predicts the preference that a human would have for one image over another in the most general possible aspects. In this way, we can use the Pickscore to rate the generated images according to their quality and select the best ones. In our experience, it helps to identify images that deviate from the original prompt and to choose more aesthetically pleasing ones.

Disclaimer: Pickscore, trained on people liking images, may not align with your goals. It gauges aesthetics, not expert preference. It worked for us, but its utility depends on your problem. Fine-tuning on your data is an option, but it needs a sizable dataset, which can be challenging.

Conclusion

In conclusion, this article offers valuable insights into addressing the challenges posed by unreliable generative AI, specifically focusing on image generation. The key takeaway is the need for businesses to adopt strategies that enhance the reliability of AI models.

The presented tips offer practical approaches to enhance image generation processes. Firstly, using fine-tuned models tailored to specific tasks (Tip 1) and employing Low-Rank Adaptation for small datasets (Tip 2) are referenced as effective methods. Furthermore, we suggest utilizing Text-To-Image models for precise control over outputs (Tip 3) and emphasizing in the most relevant regions within generated images through the use of saliency maps (Tip 4).

Last but not least, Tip 5 highlights a scoring and filtering mechanism to address various error types. The provided heuristics, such as analyzing face sizes and leveraging face detector models, offer practical ways to improve the overall quality of generated images using this technique. Recognizing the absence of a silver bullet in generative AI, we strongly encourage maintaining a creative and heuristic-driven approach tailored to specific use cases. Given the dynamic nature of the field and the continuous emergence of larger and better models, staying updated and thinking creatively are essential to bringing value to future model releases.